curl-library

Re: ftp enhancement - FTP wildcard download

Date: Thu, 31 Dec 2009 02:59:19 +0100

> Hey, sorry it took me a while to respond. Christmas and all got in the

> way! ;-)

Thanks for the response! My reply has taken a long time too; I absolutely

understand, Christmas...

> I would prefer that metadata callback to get called before any data is

> delivered, so that an app could get to know the file name etc before any

> data is downloaded.

Yes, calling this callback marks the start of a new file transfer and the

end of the previous one (it gives info about the download result, bytes

transferred, etc.)

> That metadata callback could then also perhaps be allowed to skip files

> the client deems unnecessary to get...

Nice! I hadn't thought about that, and it is a really clever idea!
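
A minimal sketch of how such a metadata callback with a "skip" return value might look. Every name here (`file_decision`, `file_info`, `metadata_cb`, `run_transfers`, `skip_large`) is hypothetical and made up for illustration; none of it is existing libcurl API. The callback is invoked before any data of an entry would be delivered, and the entry is skipped when the callback asks for it.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical names throughout; this is not existing libcurl API. */
enum file_decision { FILE_DOWNLOAD, FILE_SKIP };

struct file_info {
  const char *name; /* file name as reported by the listing */
  long size;        /* size in bytes */
};

/* Called once per matched file, before any of its data is delivered. */
typedef enum file_decision (*metadata_cb)(const struct file_info *info,
                                          void *userp);

/* Walks the matched entries and returns how many would be downloaded. */
size_t run_transfers(const struct file_info *files, size_t count,
                     metadata_cb cb, void *userp)
{
  size_t downloaded = 0;
  size_t i;

  assert(files != NULL || count == 0);

  for(i = 0; i < count; i++) {
    /* without a callback, every matched file is downloaded */
    if(cb && cb(&files[i], userp) == FILE_SKIP)
      continue;
    /* a real implementation would start the data transfer here */
    downloaded++;
  }
  return downloaded;
}

/* Example callback: skip files larger than the limit passed via userp. */
enum file_decision skip_large(const struct file_info *info, void *userp)
{
  long limit = *(const long *)userp;
  return info->size > limit ? FILE_SKIP : FILE_DOWNLOAD;
}
```

An application would pass `skip_large` together with a pointer to its size limit; passing a NULL callback keeps the "download everything that matches" behaviour.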

> I would also like to see the entire wildcard concept disabled by default

> and only enabled with a standard libcurl option so that it won't break

> any existing apps.

Yep. That is necessary, and it relates to escaping wildcard characters so

they keep their literal meaning, and un-escaping them in libcurl.

> As I also mentioned on IRC, the concept should be protocol agnostic

> enough so that it can be introduced for SFTP and FILE as well, should

> someone get the inspiration to do it.

I'm going to try to do this in the best way for the "future", so that other

wildcard-loving protocols can be added as simply as possible.

> Still not really figured out is if the matching should be done

> client-side by libcurl or server-side by the server - I expect FTP

> servers to provide different matching support for example.

I expect the same behaviour, which is the reason why I'd rather do this

client-side (unfortunately).

> Also, if done client-side it might be easier to provide the same

> matching concept not only between different FTP servers but also in the

> future between FTP and SFTP downloads etc.

I think we should generally expect different types of LIST response too :-(

.. parsing the LIST response should be done by a protocol-dependent handler which

produces a well-defined "protocol independent" list of

files/directories/(in future maybe links)..
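
To illustrate the split between a protocol-dependent parser and a protocol-independent entry, here is a rough sketch. It assumes one common Unix `ls -l` style LIST line; real servers vary, which is exactly the concern above. The names `list_entry`, `entry_type` and `parse_unix_list_line` are invented for this example.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical protocol-independent result of parsing one listing line. */
enum entry_type { ENTRY_FILE, ENTRY_DIR, ENTRY_LINK, ENTRY_OTHER };

struct list_entry {
  enum entry_type type;
  long size;
  char name[256];
};

/* Parses one Unix-style LIST line such as
 *   -rw-r--r--   1 ftp ftp   1234 Dec 31 02:59 readme.txt
 * Returns 1 on success, 0 if the line does not have that shape.
 * For symlinks the name keeps its " -> target" suffix in this sketch. */
int parse_unix_list_line(const char *line, struct list_entry *out)
{
  char perms[16], month[4], day[3], when[6];
  long size;
  int consumed = 0;
  size_t len;

  assert(line != NULL && out != NULL);

  /* perms, link count, owner, group, size, month, day, time-or-year */
  if(sscanf(line, "%15s %*s %*s %*s %ld %3s %2s %5s %n",
            perms, &size, month, day, when, &consumed) != 5)
    return 0;
  if(consumed == 0)
    return 0;

  /* the rest of the line is the name; drop a trailing CR/LF */
  len = strcspn(line + consumed, "\r\n");
  if(len == 0 || len >= sizeof(out->name))
    return 0;
  memcpy(out->name, line + consumed, len);
  out->name[len] = '\0';

  switch(perms[0]) {
  case '-': out->type = ENTRY_FILE; break;
  case 'd': out->type = ENTRY_DIR; break;
  case 'l': out->type = ENTRY_LINK; break;
  default:  out->type = ENTRY_OTHER; break;
  }
  out->size = size;
  return 1;
}
```

A parser for another listing format or another protocol would fill the same `list_entry` structure, so the matching code would never need to know where the entry came from.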

> I also think it's worth to consider and think about what this suggested

> feature does NOT include. Like recursive downloads. Or what happens if

> the pattern matches a directory name?

I thought about it .. I propose that there could be a new CURLOPT_RECURSIVE

option or something like it, and the default should probably be "false"?

As Kamil told you on IRC, I'd like to implement matching with fnmatch(3),

but there could be (maybe) a small problem. The fnmatch function is POSIX,

but there is a problem with linking on Windows, and there probably could be

on other platforms too.

On Windows this function is implemented in libiberty.a, which is fine, but

there is no "fnmatch.h" header file. The main reason why this header is

missing is that errors could occur with "Windows-like" backslashes

in paths (that is my current guess, I'm not sure). That is not a problem for

libcurl+FTP and many other protocols (which have no backslashes in the URL), but

for "file://" under Windows it could cause trouble. (That is true only when

the user wants to get "file://c:\something\*", not if the user asks for the "fnmatch

friendly" "file://c:/something/*", of course.)

AND when I tried it under Windows, the matching was 100% good for our needs!

Because...

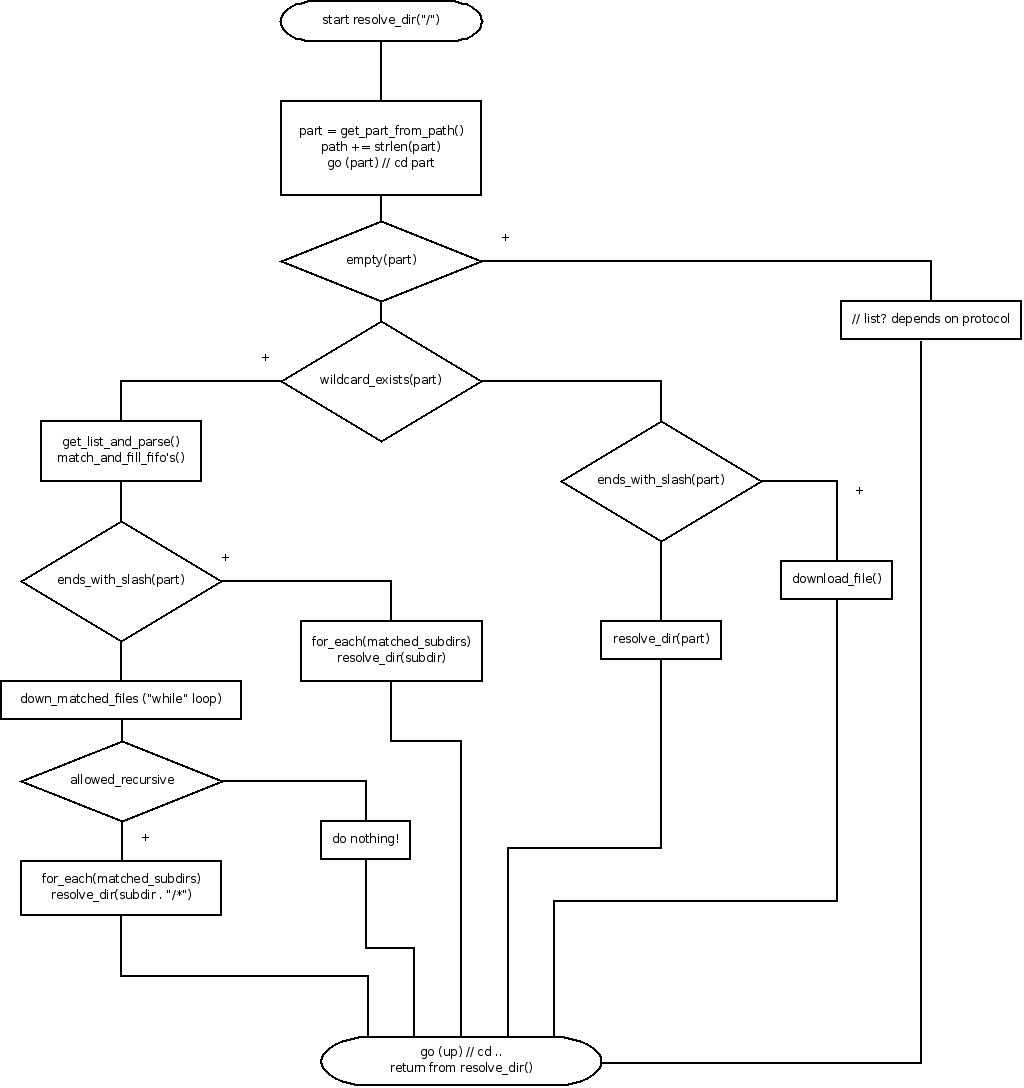

...the model I propose does not use fnmatch in this "conflicting" way, so the

"fnmatch" dependency should not cause us any practical problems. Please see the

pseudocode in the attachment. I don't want to do matching on the full path;

I'd like to go from the root and match each part of the path separately.

Backslashes are off topic!
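
The idea of matching each path part separately can be sketched as follows. This is only an illustration under assumptions, not the attached pseudocode: the helper name `match_by_component` and the fixed 256-byte component buffers are made up for the example, and it relies on POSIX `fnmatch()` being available.

```c
#include <assert.h>
#include <fnmatch.h>
#include <string.h>

/* Hypothetical helper: returns 1 if `path` matches `pattern` when both are
 * split on '/' and compared one component at a time, 0 otherwise. */
int match_by_component(const char *pattern, const char *path)
{
  assert(pattern != NULL && path != NULL);

  while(*pattern && *path) {
    char pat[256];
    char name[256];
    size_t plen = strcspn(pattern, "/"); /* length of pattern component */
    size_t nlen = strcspn(path, "/");    /* length of path component */

    if(plen >= sizeof(pat) || nlen >= sizeof(name))
      return 0; /* component too long for this sketch */

    memcpy(pat, pattern, plen);
    pat[plen] = '\0';
    memcpy(name, path, nlen);
    name[nlen] = '\0';

    /* fnmatch() only ever sees one component, never a full path */
    if(fnmatch(pat, name, 0) != 0)
      return 0;

    pattern += plen;
    path += nlen;

    if(*pattern == '/' && *path == '/') {
      /* both sides continue one level deeper */
      pattern++;
      path++;
    }
    else if(*pattern || *path)
      return 0; /* one side has more components than the other */
  }
  return *pattern == '\0' && *path == '\0';
}
```

With this approach a `*` in one component cannot swallow a directory separator, so `match_by_component("*", "a/b")` is rejected while `match_by_component("pub/*.txt", "pub/readme.txt")` is accepted.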

There is only one condition: just add "fnmatch.h" to libcurl's header files

and link with -liberty. I am talking about Windows (MinGW), of

course! Under my Debian and other Unix-like systems it causes no trouble.

Unfortunately, I don't know how compatible other, more unusual platforms are with

POSIX. I'd be very happy if somebody checked this ..

My priorities are "no new dependencies" and "no breaking backward

compatibility"; however, reproducing the fnmatch "effect" myself could be relatively

complex .. :-/

Attached to this e-mail are a conceptual pseudocode.c and a diagram.png

describing the enhancement of libcurl's easy API; multi will be analogous, but in a

non-blocking version. It is not necessary to study this simple

concept "in detail", but if somebody here could look at it and check it (in case I'm

completely wrong), I'd be very happy. It is only meant to show you how I

want to do this.

Note that I haven't solved the progress bar! I think there are 3 cases:

1) Probably I should update the progress bar for each file separately... or, what I

think is a worse case:

2) Walk through the directory(ies) twice: first to count the full size of the

matched files, then to download them. That is

unacceptable, I think.. In addition, it may break the progress bar when a

file is skipped in the callback..

3) Or I can walk through the tree once and remember the size + list of matched

files, which will be downloaded later. (This wastes memory and it breaks

the progress bar too.)

Pavel

PS: writing in English is new for me, sorry if something doesn't make

sense.. I'll do my best to answer more understandably when it is

necessary.

-------------------------------------------------------------------

List admin: http://cool.haxx.se/list/listinfo/curl-library

Etiquette: http://curl.haxx.se/mail/etiquette.html

- application/octet-stream attachment: pseudocode.c